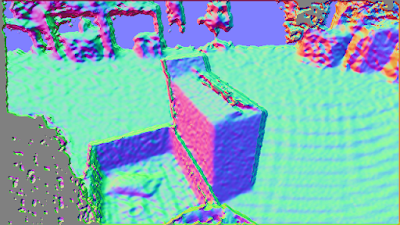

The RealSense tracking camera T265 outputs rotation as a quaternion, and translation as a vector.

This is all the background needed to understand point rotation using quaternions: p' = qpq'.

Format of a quaternion q:

The quaternion format displayed is [x,y,z,w], where x,y,z is the vector portion and w is the scalar.

For clarity, let's use this notation to talk about quaternions: [qw, qx, qy, qz].

The RealSense quaternion output is just re-ordered and re-labeled: w is now qw, x is now qx, y is now qy, and z is now qz.
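The re-ordering can be sketched in a few lines of Python. The function name `xyzw_to_wxyz` is made up for this illustration and is not part of the RealSense SDK; it only assumes the quaternion arrives as four numbers in [x, y, z, w] order.

```python
def xyzw_to_wxyz(q_xyzw):
    """Reorder a quaternion from [x, y, z, w] to [qw, qx, qy, qz]."""
    x, y, z, w = q_xyzw
    return [w, x, y, z]


# The identity rotation (no rotation at all) in [x, y, z, w] order.
print(xyzw_to_wxyz([0.0, 0.0, 0.0, 1.0]))  # [1.0, 0.0, 0.0, 0.0]
```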

qw contains the information about the amount of rotation around the axis contained in qx, qy, qz.

BUT THIS IS IMPORTANT:

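qx, qy, qz are not directly the axis vector of rotation. Each component of the axis vector (which is assumed to have length 1) is scaled by the sine of half the angle of rotation.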

qw = cos( angle of rotation / 2)

qx = sin( angle of rotation / 2) * the x component of the vector of rotation.

qy = sin( angle of rotation / 2) * the y component of the vector of rotation.

qz = sin( angle of rotation / 2) * the z component of the vector of rotation.
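As a minimal sketch of the four formulas above, here is one way to build a quaternion from an axis and an angle in Python. The function name `quaternion_from_axis_angle` is hypothetical, the angle is assumed to be in radians, and the axis is normalized to length 1 first, since the formulas assume a unit axis vector.

```python
import math


def quaternion_from_axis_angle(axis, angle_rad):
    """Return (qw, qx, qy, qz) for a rotation of angle_rad around axis."""
    ax, ay, az = axis
    norm = math.sqrt(ax * ax + ay * ay + az * az)
    if norm == 0.0:
        raise ValueError("axis must be a non-zero vector")
    # Normalize so the axis has length 1.
    ax, ay, az = ax / norm, ay / norm, az / norm
    half = angle_rad / 2.0
    s = math.sin(half)
    return (math.cos(half), ax * s, ay * s, az * s)


# 90 degrees around the z axis: qw and qz are both about 0.7071.
q = quaternion_from_axis_angle((0.0, 0.0, 1.0), math.pi / 2)
print(q)
```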

Finding the angle of rotation and the axis of rotation vector

If you want to find the angle of rotation around the axis vector, calculate the arccosine of qw and multiply by 2:

angle of rotation = 2 * (arccos (qw))

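To find the vector that is the axis of rotation, qx, qy, and qz must each be divided by sin(angle of rotation / 2). When the angle of rotation is 0, this sine is 0 and the axis is undefined, because no rotation has taken place.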

axis vector x component = qx / sin( angle of rotation / 2)

axis vector y component = qy / sin( angle of rotation / 2)

axis vector z component = qz / sin( angle of rotation / 2)
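The steps above can be sketched in Python as follows. The function name `axis_angle_from_quaternion` and the `eps` threshold are assumptions for this example. The sketch assumes a unit quaternion in [qw, qx, qy, qz] order, clamps qw to guard against rounding errors, and returns an arbitrary axis when the sine is too close to zero to divide by.

```python
import math


def axis_angle_from_quaternion(q, eps=1e-9):
    """Return (angle_rad, (x, y, z)) for a unit quaternion (qw, qx, qy, qz)."""
    qw, qx, qy, qz = q
    # Rounding errors can push qw slightly outside [-1, 1], which acos rejects.
    qw = max(-1.0, min(1.0, qw))
    angle = 2.0 * math.acos(qw)
    s = math.sin(angle / 2.0)
    if abs(s) < eps:
        # No rotation: the axis is undefined, so pick an arbitrary one.
        return angle, (1.0, 0.0, 0.0)
    return angle, (qx / s, qy / s, qz / s)


# A 90 degree rotation around z comes back as roughly (pi/2, (0, 0, 1)).
half = math.pi / 4
angle, axis = axis_angle_from_quaternion((math.cos(half), 0.0, 0.0, math.sin(half)))
print(math.degrees(angle), axis)
```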

Conjugate quaternion q'

Rotating by the same angle around the opposite vector reverses the rotation. The opposite vector is simply the axis vector multiplied by -1. The result is called the conjugate because the signs of the axis vector components are all reversed. The conjugate quaternion is needed to flip the quaternion frame of reference when a quaternion is used to rotate a point in space. The angle of rotation does not change; only the vector is inverted.

To create a conjugate quaternion, do the following:

qw' = conjugate qw = qw = cos( angle of rotation / 2)

qx' = conjugate qx = -1 * sin( angle of rotation / 2) * the x component of the vector of rotation.

qy' = conjugate qy = -1 * sin( angle of rotation / 2) * the y component of the vector of rotation.

qz' = conjugate qz = -1 * sin( angle of rotation / 2) * the z component of the vector of rotation.
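In code, the conjugate is just a sign flip on the three vector components. A minimal Python sketch, with `conjugate` as a made-up helper name and the quaternion assumed to be in [qw, qx, qy, qz] order:

```python
def conjugate(q):
    """Return q' for q = (qw, qx, qy, qz): same scalar, negated vector part."""
    qw, qx, qy, qz = q
    return (qw, -qx, -qy, -qz)


print(conjugate((1, 2, 3, 4)))  # (1, -2, -3, -4)
```

Applying the conjugate twice gives back the original quaternion.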

Convert the point to a quaternion p

A point can be represented as a vector. We will use px, py, pz as our point notation. To create a quaternion from the point, just add pw with a value of zero. But remember that pw has to be equal to the cosine of the rotation angle divided by 2. Since arccos(0) = 90 degrees, multiplying by 2 means the rotation of the point around itself is 180 degrees. [It's confusing, I know. It's all about getting out of a 4D rotation.] Strictly speaking, this reading as a rotation only holds when the point has length 1; for any other point, p is simply a quaternion with a zero scalar part, and the formula p' = qpq' works the same way.

The axis vector of rotation is the point px, py, pz. That is multiplied by the sine of 180/2, which is the sine of 90 degrees, which equals 1.

point quaternion pw = 0 = cos (180/2) = cos (90) = 0

point quaternion px = px * sin (180/2) = px * sin(90) = px * 1 = px

point quaternion py = py * sin (180/2) = py * sin(90) = py * 1 = py

point quaternion pz = pz * sin (180/2) = pz * sin(90) = pz * 1 = pz

The new point location p'

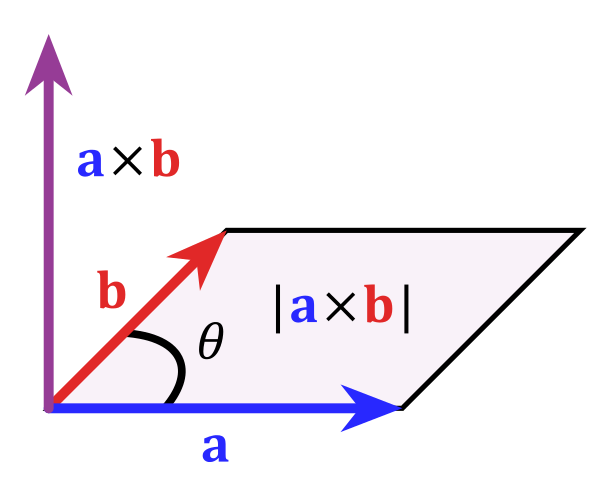

To calculate the quaternion-rotated location of a point, use the formula below [NOTE: using quaternion multiplication]:

p' = qpq'

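The order of operations is: quaternion p is multiplied by q' first. The resulting quaternion m is then multiplied on the left by q to create p'. That is, m = pq' and then p' = qm. The order matters because quaternion multiplication is not commutative.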

The values in px', py', and pz' are the rotated x,y,z of the point.

px' = new point location x

py' = new point location y

pz' = new point location z
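Putting the pieces together, the whole p' = qpq' calculation might look like this in Python. This is a sketch rather than a definitive implementation: `multiply` and `rotate_point` are names invented for this example, q is assumed to be a unit quaternion in [qw, qx, qy, qz] order, and the product formula inside `multiply` is the standard quaternion product.

```python
import math


def multiply(p, q):
    """Quaternion product p * q, both given as (w, x, y, z)."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return (
        pw * qw - px * qx - py * qy - pz * qz,
        pw * qx + px * qw + py * qz - pz * qy,
        pw * qy - px * qz + py * qw + pz * qx,
        pw * qz + px * qy - py * qx + pz * qw,
    )


def rotate_point(q, point):
    """Rotate point (x, y, z) by unit quaternion q using p' = q p q'."""
    qw, qx, qy, qz = q
    q_conj = (qw, -qx, -qy, -qz)              # q'
    p = (0.0, point[0], point[1], point[2])   # point as a quaternion, pw = 0
    m = multiply(p, q_conj)                   # m = p q'
    _, x, y, z = multiply(q, m)               # p' = q m
    return (x, y, z)


# Rotate the point (1, 0, 0) by 90 degrees around the z axis.
half = math.pi / 4
q = (math.cos(half), 0.0, 0.0, math.sin(half))
print(rotate_point(q, (1.0, 0.0, 0.0)))  # approximately (0, 1, 0)
```

The scalar part of p' is discarded because it comes out as zero (up to rounding) whenever q is a unit quaternion.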

Quaternion multiplication

A quaternion is also written as (qw + qxi + qyj + qzk), where i, j, k each represent a square root of -1 along 3 complex (imaginary number) axes. I'm ignoring the 4D complex stuff here because it confused me for a long time. Let's just look at what happens if you multiply using this format:

(pw + pxi + pyj + pzk) (qw + qxi + qyj + qzk) =

pw*qw + pw*qxi + pw*qyj + pw*qzk + px*qwi - px*qx + px*qyk - px*qzj + py*qwj - py*qxk - py*qy + py*qzi + pz*qwk + pz*qxj - pz*qyi - pz*qz

= (pw*qw - px*qx - py*qy - pz*qz) + (pw*qx + px*qw + py*qz - pz*qy)i + (pw*qy - px*qz + py*qw + pz*qx)j + (pw*qz + px*qy - py*qx + pz*qw)k

Multiplication is distributive, and the products of i, j, and k follow a multiplication table that comes from i*i = j*j = k*k = i*j*k = -1 (see below).

The resulting quaternion product of this multiplication is:

[Note: I decided to call this quaternion m]

mw = (pw*qw - px*qx - py*qy - pz*qz)

mx = (pw*qx + px*qw + py*qz - pz*qy)

my = (pw*qy - px*qz + py*qw + pz*qx)

mz = (pw*qz + px*qy - py*qx + pz*qw)
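The four formulas for m translate directly into code. Here is a small Python sketch; the function name `multiply` is my own choice, and quaternions are assumed to be tuples in [w, x, y, z] order. The example at the end multiplies the pure quaternions i and j in both orders to show that swapping the order changes the sign of the result.

```python
def multiply(p, q):
    """Return m = p * q for quaternions given as (w, x, y, z)."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    mw = pw * qw - px * qx - py * qy - pz * qz
    mx = pw * qx + px * qw + py * qz - pz * qy
    my = pw * qy - px * qz + py * qw + pz * qx
    mz = pw * qz + px * qy - py * qx + pz * qw
    return (mw, mx, my, mz)


i = (0, 1, 0, 0)
j = (0, 0, 1, 0)

print(multiply(i, j))  # (0, 0, 0, 1), which is k
print(multiply(j, i))  # (0, 0, 0, -1), which is -k
```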

i*j*k = -1

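The multiplication table for i, j, k (the row entry is the left factor, the column entry is the right factor):

| × | 1 | i | j | k |
|---|---|---|---|---|
| 1 | 1 | i | j | k |
| i | i | −1 | k | −j |
| j | j | −k | −1 | i |
| k | k | j | −i | −1 |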

https://en.wikipedia.org/wiki/Quaternion
